VR.net

Introduction

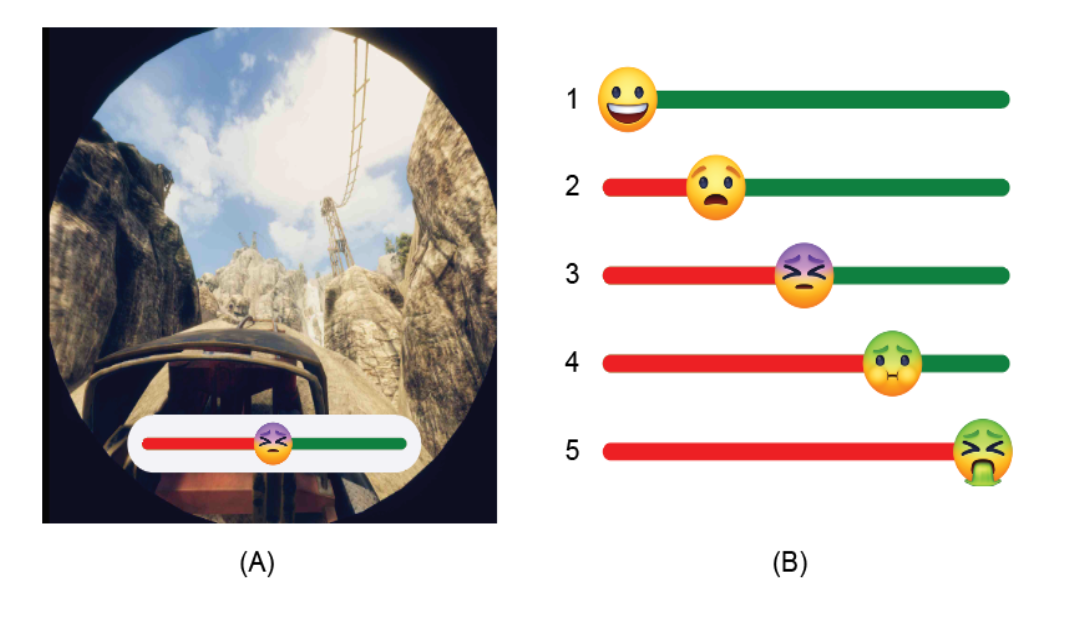

Researchers have used machine learning approaches to identify motion sickness in VR experiences. These approaches require an accurately labeled, real-world, and diverse dataset to achieve high accuracy and generalizability. As a starting point to address this need, we introduce ‘VR.net’, a dataset offering approximately 12 hours of gameplay videos from ten real-world games in ten diverse genres. For each video frame, a rich set of motion sickness-related labels, such as camera/object movement, depth field, and motion flow, is accurately assigned. Building such a dataset is challenging since manual labeling would require an infeasible amount of time. Instead, we use a tool to automatically and precisely extract ground truth data from 3D engines’ rendering pipelines without accessing the VR games’ source code. We illustrate the utility of VR.net through several applications, such as risk factor detection and sickness level prediction. We are continuously expanding VR.net and envision its next version offering 10X more data than the current one. We believe that the scale, accuracy, and diversity of VR.net can offer unparalleled opportunities for VR motion sickness research and beyond.

Dataset Format

For each game frame, our dataset provides the following types of data.

-

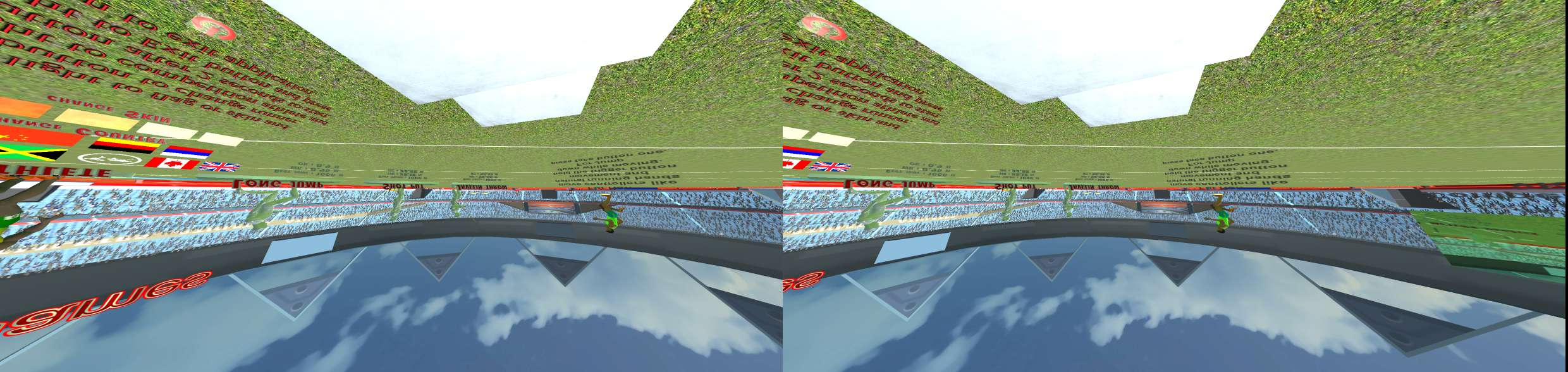

RGB Image for both eyes (JPG format, Same resolution to your VR Goggle)

-

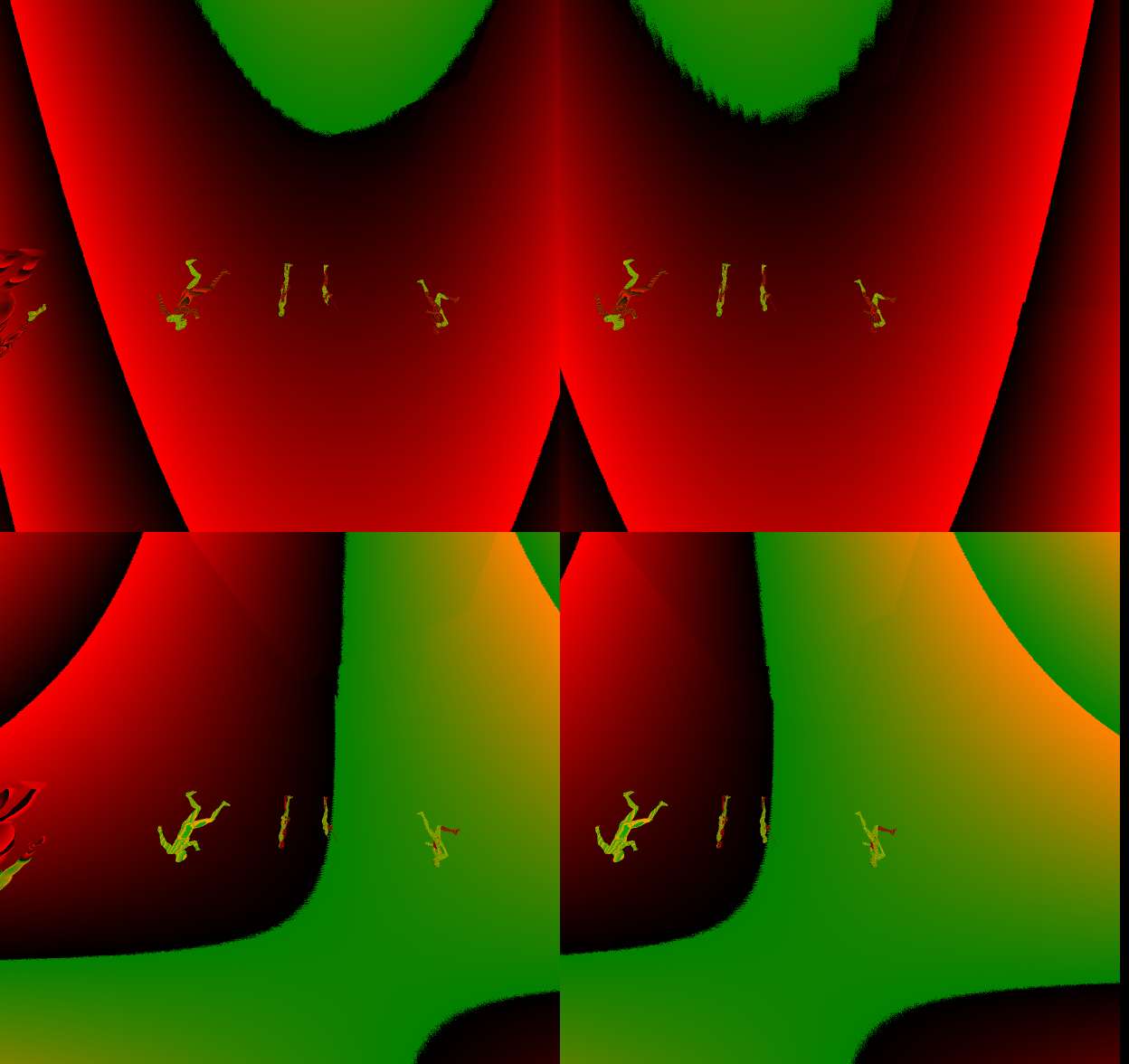

Motion Flow Image (JPG format. Its resolution has same width to your VR Goggle, but double the height since every motion vector is 2-dimensional).

-

Depth Image from the Main Camera (JPG format. Same resolution to your VR Goggle)

- Pose Information for VR Headset and Controllers

      class TrackedDevicePose {
          // position in tracker space
          float[4][4] mDeviceToAbsoluteTracking;
          // velocity in tracker space in m/s
          float[3] vVelocity;
          // angular velocity in radians/s
          float[3] vAngularVelocity;
      };

- Controller Button/Trackpad Events

      class VRControllerState {
          // bit flags for each of the buttons.
          uint64_t ulButtonPressed;
          uint64_t ulButtonTouched;
          // Axis data for the controller's analog inputs
          VRControllerAxis_t rAxis[ k_unControllerStateAxisCount ];
      };

- Scene Object Data

      class ObjectData {
          // Object Name
          public char[] name;
          // Object Boundary
          public float[6] bounds;
          // Model Matrix
          public float[4][4] localToWorldMatrix;
      }

- Camera Data

      class CameraData {
          // Camera Name
          public char[] name;
          // View Matrix
          public float[4][4] view;
          // Projection Matrix
          public float[4][4] projection;
      }

- Light Source Data

      class LightData {
          // Light Name
          public char[] name;
          // Light Materials (e.g., shadow & color)
          public float[13] Material;
          // Light Position Matrix
          public float[4][4] localToWorldMatrix;
      }

- User Self Report From Microsoft Dial or Voice Commands.
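As a rough illustration of how the pose and controller records might be consumed, the Python sketch below decodes a button bit mask and reads the position and speed out of a pose record. The struct names mirror OpenVR, so the sketch assumes OpenVR conventions: the mask bit for a button is `1 << id`, and the translation sits in the fourth column of the first three rows of `mDeviceToAbsoluteTracking`. Whether VR.net stores its records with exactly this layout is an assumption, and the helper names (`pressed_buttons`, `device_position`, `speed`) are hypothetical rather than part of any VR.net tooling.

```python
import math
from typing import List, Sequence, Tuple

# Button ids as defined by OpenVR's EVRButtonId enum.
# Assumption: VR.net keeps OpenVR's encoding, where a button's mask bit is 1 << id.
BUTTON_IDS = {
    "system": 0,
    "application_menu": 1,
    "grip": 2,
    "touchpad": 32,  # k_EButton_SteamVR_Touchpad
    "trigger": 33,   # k_EButton_SteamVR_Trigger
}


def pressed_buttons(mask: int) -> List[str]:
    """Return the names of all known buttons whose bit is set in the mask."""
    return [name for name, bit in BUTTON_IDS.items() if mask & (1 << bit)]


def device_position(m: Sequence[Sequence[float]]) -> Tuple[float, float, float]:
    """Read the translation out of a device-to-tracking matrix.

    Assumption: rows are stored first and the translation occupies the
    fourth column, as in OpenVR's HmdMatrix34_t.
    """
    return (m[0][3], m[1][3], m[2][3])


def speed(v: Sequence[float]) -> float:
    """Magnitude of a 3-component velocity (m/s) or angular velocity (rad/s)."""
    return math.sqrt(sum(c * c for c in v))


if __name__ == "__main__":
    # Hypothetical sample values, not taken from the dataset.
    pose = [
        [1.0, 0.0, 0.0, 0.2],
        [0.0, 1.0, 0.0, 1.6],
        [0.0, 0.0, 1.0, -0.3],
        [0.0, 0.0, 0.0, 1.0],
    ]
    print(device_position(pose))
    print(speed([3.0, 4.0, 0.0]))
    print(pressed_buttons((1 << 33) | (1 << 2)))
```

Keeping the button table as a plain dictionary makes it easy to extend if the recorded controllers expose additional ids.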

For more detailed information, please refer to our Data Card.
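The per-frame camera and object matrices can, in principle, be combined to locate an object in the rendered image. The sketch below is a minimal example under two assumptions that the format description does not confirm: each matrix is stored as four rows of four floats, and points are transformed as column vectors (clip = projection × view × model × point). The function name `object_origin_to_ndc` is hypothetical. The range and orientation of the resulting normalized device coordinates depend on the graphics API used by each game, so treat the output as a starting point to validate against the RGB frames.

```python
from typing import List, Optional, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def mat_vec(m: Matrix, v: Sequence[float]) -> List[float]:
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def object_origin_to_ndc(
    local_to_world: Matrix, view: Matrix, projection: Matrix
) -> Optional[Tuple[float, float, float]]:
    """Project an object's local origin into normalized device coordinates.

    Returns None when the homogeneous w component is zero, in which case
    the perspective divide is undefined.
    """
    # Object space -> world space (the object's origin is (0, 0, 0, 1)).
    world = mat_vec(local_to_world, [0.0, 0.0, 0.0, 1.0])
    # World space -> camera space -> clip space.
    clip = mat_vec(projection, mat_vec(view, world))
    w = clip[3]
    if w == 0.0:
        return None
    # Perspective divide.
    return (clip[0] / w, clip[1] / w, clip[2] / w)


if __name__ == "__main__":
    identity = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
    # Hypothetical object translated by (0.5, -0.25, 0) in world space.
    model = [
        [1.0, 0.0, 0.0, 0.5],
        [0.0, 1.0, 0.0, -0.25],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]
    print(object_origin_to_ndc(model, identity, identity))
```

If the matrices turn out to be stored in the transposed layout, transposing each one before calling the function is enough to adapt the sketch.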

Access to Dataset and License

You can download the current version of VR.net via this link. By downloading this dataset, you agree to our terms and conditions and to the CC-BY-NC-SA license. Specifically, the material can be freely shared, redistributed, transformed, built upon, and adapted for any non-commercial purpose. Anyone using the material must provide credit to the original authors and indicate any changes that were made. Anyone sharing the work or a derivative of it must do so under the same license terms as it was originally shared. This prevents a derivative version from being released under a more open license that would allow commercial use.

Citation:

The recommended citation for this dataset is:

    @article{wen2023vr,
      title={VR.net: A Real-world Dataset for Virtual Reality Motion Sickness Research},
      author={Wen, Elliott and Gupta, Chitralekha and Sasikumar, Prasanth and Billinghurst, Mark and Wilmott, James and Skow, Emily and Dey, Arindam and Nanayakkara, Suranga},
      journal={arXiv preprint arXiv:2306.03381},
      year={2023}
    }